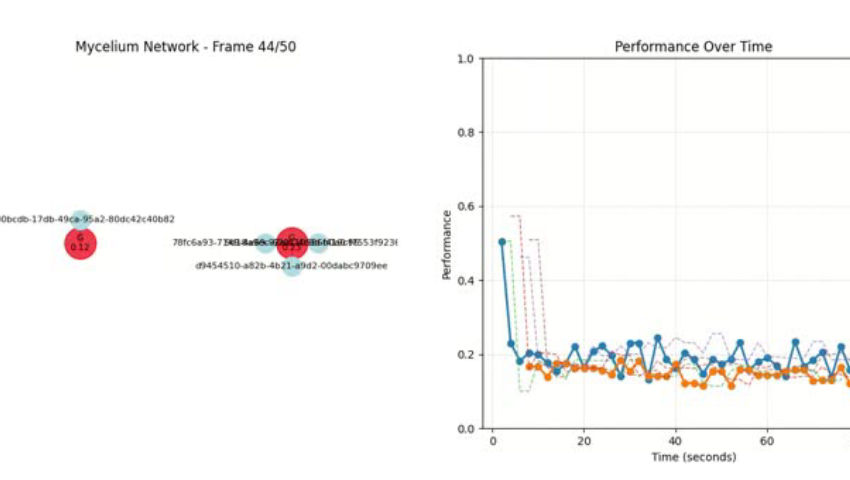

I built a prototype implementation of a “network of ML networks” - an internet-like protocol for federated learning where nodes can discover, join, and migrate between different learning groups based on performance metrics (Repo: https://github.com/bluebbberry/MyceliumNetServer). It’s built on Flower AI.
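To make the "migrate between learning groups" idea concrete, here is a minimal sketch of how a node might pick its group. This is not code from the repo and it does not use Flower's API; `Group`, `choose_group`, the use of validation accuracy as the metric, and the `margin` threshold are all hypothetical names and assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Group:
    """A learning group as seen by a node (hypothetical structure)."""
    name: str
    accuracy: float  # latest reported validation accuracy, in [0, 1]


def choose_group(current: Group, discovered: list[Group], margin: float = 0.02) -> Group:
    """Return the group the node should train with next round.

    The node migrates only if some discovered group beats its current
    group by more than `margin`, so it does not flip back and forth
    over tiny differences.
    """
    best = max(discovered + [current], key=lambda g: g.accuracy)
    if best.accuracy - current.accuracy > margin:
        return best
    return current


if __name__ == "__main__":
    me = Group("cats-vs-dogs-eu", 0.80)
    others = [Group("cats-vs-dogs-us", 0.81), Group("pets-global", 0.90)]
    print(choose_group(me, others).name)
```

The margin is one possible design choice: without it, nodes could keep hopping between groups whose metrics differ only by noise.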

What do you think of this? It could be used to build a Napster/BitTorrent-like app for collaboratively training and sharing arbitrary machine learning models with other people, keeping data private and sharing only gradients instead of whole models to save bandwidth. Would this be a good counterweight to big AI companies, or would it actually make things worse?
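For anyone unfamiliar with the "keep data private, share only updates" part, here is a rough sketch of the general federated averaging idea in plain Python. It is a toy under stated assumptions, not the repo's implementation and not Flower's API: the model is a single weight `w` fitting `y ≈ w * x`, and `local_delta`, `aggregate`, and `train` are hypothetical helper names.

```python
def local_delta(w, data, lr=0.1):
    """Run one gradient step on a node's private data; return only the weight change.

    `data` is a list of (x, y) pairs that never leaves the node.
    """
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return -lr * grad


def aggregate(deltas, sizes):
    """Average the nodes' updates, weighted by how many samples each node holds."""
    total = sum(sizes)
    return sum(d * n for d, n in zip(deltas, sizes)) / total


def train(node_datasets, rounds=50, w=0.0):
    """Repeat: every node computes an update locally, then updates are averaged."""
    sizes = [len(d) for d in node_datasets]
    for _ in range(rounds):
        deltas = [local_delta(w, d) for d in node_datasets]
        w += aggregate(deltas, sizes)
    return w


if __name__ == "__main__":
    # Two nodes with private samples drawn from y = 2 * x.
    nodes = [[(1, 2), (2, 4)], [(3, 6)]]
    print(train(nodes))
```

Only the per-node `delta` values cross the network in this sketch; the `(x, y)` pairs stay where they are.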

Would love to hear your opinion ;)

What would a use case look like?

I assume that the latency will make it impractical to train something that’s LLM-sized. But even for something small, wouldn’t a data center be more efficient?