- cross-posted to:

- technology@lemmy.world

Privacy stalwart Nicholas Merrill spent a decade fighting an FBI surveillance order. Now he wants to sell you phone service—without knowing almost anything about you.

Nicholas Merrill has spent his career fighting government surveillance. But he would really rather you didn’t call what he’s selling now a “burner phone.”

Yes, he dreams of a future where anyone in the US can get a working smartphone—complete with cellular coverage and data—without revealing their identity, even to the phone company. But to call such anonymous phones “burners” suggests that they’re for something illegal, shady, or at least subversive. The term calls to mind drug dealers or deep-throat confidential sources in parking garages.

With his new startup, Merrill says he instead wants to offer cellular service for your existing phone that makes near-total mobile privacy the permanent, boring default of daily life in the US. “We’re not looking to cater to people doing bad things,” says Merrill. “We’re trying to help people feel more comfortable living their normal lives, where they’re not doing anything wrong, and not feel watched and exploited by giant surveillance and data mining operations. I think it’s not controversial to say the vast majority of people want that.”

That’s the thinking behind Phreeli, the phone carrier startup Merrill launched today, designed to be the most privacy-focused cellular provider available to Americans. Phreeli, as in, “speak freely,” aims to give its users a different sort of privacy from the kind that can be had with end-to-end encrypted texting and calling tools like Signal or WhatsApp. Those apps hide the content of conversations, or even, in Signal’s case, metadata like the identities of who is talking to whom. Phreeli instead wants to offer actual anonymity. It can’t help government agencies or data brokers obtain users’ identifying information because it has almost none to share. The only piece of information the company records about its users when they sign up for a Phreeli phone number is, in fact, a mere ZIP code. That’s the minimum personal data Merrill has determined his company is legally required to keep about its customers for tax purposes.

By asking users for almost no identifiable information, Merrill wants to protect them from one of the most intractable privacy problems in modern technology: Despite whatever surveillance-resistant communications apps you might use, phone carriers will always know which of their customers’ phones are connecting to which cell towers and when. Carriers have frequently handed that information over to data brokers willing to pay for it, or to any FBI or ICE agent that demands it with a court order.

Merrill has some firsthand experience with those demands. Starting in 2004, he fought a landmark, decade-plus legal battle against the FBI and the Department of Justice. As the owner of an internet service provider in the post-9/11 era, Merrill had received a secret order from the bureau to hand over data on a particular user—and he refused. After that, he spent another 15 years building and managing the Calyx Institute, a nonprofit that offers privacy tools like a snooping-resistant version of Android and a free VPN that collects no logs of its users’ activities. “Nick is somebody who is extremely principled and willing to take a stand for his principles,” says Cindy Cohn, who as executive director of the Electronic Frontier Foundation has led the group’s own decades-long fight against government surveillance. “He’s careful and thoughtful, but also, at a certain level, kind of fearless.”

Nicholas Merrill with a copy of the National Security Letter he received from the FBI in 2004, ordering him to give up data on one of his customers. He refused, fought a decade-plus court battle—and won.

More recently, Merrill began to realize he had a chance to achieve a win against surveillance at a more fundamental level: by becoming the phone company. “I started to realize that if I controlled the mobile provider, there would be even more opportunities to create privacy for people,” Merrill says. “If we were able to set up our own network of cell towers globally, we can set the privacy policies of what those towers see and collect.”

Building or buying cell towers across the US for billions of dollars, of course, was not within the budget of Merrill’s dozen-person startup. So he’s created the next best thing: a so-called mobile virtual network operator, or MVNO, a kind of virtual phone carrier that pays one of the big, established ones—in Phreeli’s case, T-Mobile—to use its infrastructure.

The result is something like a cellular prophylactic. The towers are T-Mobile’s, but the contracts with users—and the decisions about what private data to require from them—are Phreeli’s. “You can’t control the towers. But what can you do?” he says. “You can separate the personally identifiable information of a person from their activities on the phone system.”

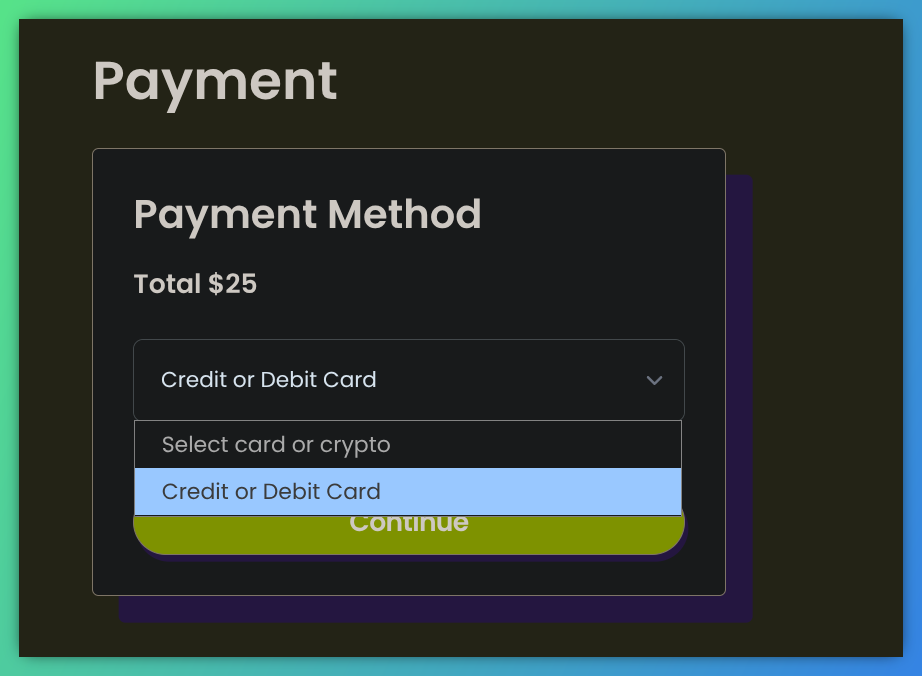

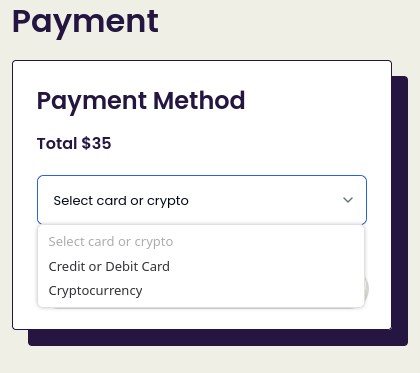

Signing up a customer for phone service without knowing their name is, surprisingly, legal in all 50 states, Merrill says. Anonymously accepting money from users—with payment options other than envelopes of cash—presents more technical challenges. To that end, Phreeli has implemented a new encryption system it calls Double-Blind Armadillo, based on cutting-edge cryptographic protocols known as zero-knowledge proofs. Through a kind of mathematical sleight of hand, those crypto functions are capable of tasks like confirming that a certain phone has had its monthly service paid for, but without keeping any record that links a specific credit card number to that phone. Phreeli users can also pay their bills (or rather, prepay them, since Phreeli has no way to track down anonymous users who owe them money) with tough-to-trace cryptocurrency like Zcash or Monero.
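
The article doesn’t spell out how Double-Blind Armadillo works beyond its basis in zero-knowledge proofs. To give a feel for how a payment can be confirmed without linking the card to the phone, here is a minimal sketch using a different, older technique swapped in for simplicity: Chaum-style RSA blind signatures. Everything in it is an illustrative assumption, not Phreeli’s design. The carrier signs a random, customer-chosen token after a card payment clears, without ever seeing the token. The customer later presents the token and signature to activate service. The toy key is built from two Mersenne primes and uses no padding, which is far too weak for real use.

```python
import hashlib
import math
import secrets

# Toy RSA key from two known Mersenne primes: far too small and structured
# for real use, chosen only so the example needs no prime generation.
P = 2**89 - 1
Q = 2**107 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, known only to the issuer


def hash_to_int(token: bytes) -> int:
    """Map a token to an integer mod N (toy hash, no padding)."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def blind(token: bytes) -> tuple[int, int]:
    """Customer: hide the token behind a random factor r before paying."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            break
    return (hash_to_int(token) * pow(r, E, N)) % N, r


def issuer_sign(blinded: int) -> int:
    """Issuer: sign after the card payment clears; sees only the blinded value."""
    return pow(blinded, D, N)


def unblind(blind_sig: int, r: int) -> int:
    """Customer: remove r, leaving an ordinary RSA signature on the token."""
    return (blind_sig * pow(r, -1, N)) % N


def verify(token: bytes, sig: int) -> bool:
    """Activation service: check the signature without learning who paid."""
    return pow(sig, E, N) == hash_to_int(token)


if __name__ == "__main__":
    token = secrets.token_bytes(16)  # hypothetical per-billing-period token
    blinded, r = blind(token)
    sig = unblind(issuer_sign(blinded), r)
    print(verify(token, sig))  # prints True
```

Because the issuer only ever sees `blinded`, which is randomized by `r`, it cannot later match a redeemed `(token, sig)` pair to the card payment that produced it.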

Phreeli users can, however, choose to set their own dials for secrecy versus convenience. If they offer an email address at signup, they can more easily recover their account if their phone is lost. To get a SIM card, they can give their mailing address—which Merrill says Phreeli will promptly delete after the SIM ships—or they can download the digital equivalent known as an eSIM, even, if they choose, from a site Phreeli will host on the Tor anonymity network.

Phreeli’s “armadillo” analogy—the animal also serves as the mascot in its logo—is meant to capture this sliding scale of privacy that Phreeli offers its users: Armadillos always have a layer of armor, but they can choose whether to expose their vulnerable underbelly or curl into a fully protected ball.

Even if users choose the less paranoid side of that spectrum of options, Merrill argues, his company will still be significantly less surveillance-friendly than existing phone companies, which have long represented one of the weakest links in the tech world’s privacy protections. All major US cellular carriers comply, for instance, with law enforcement surveillance orders like “tower dumps” that hand over data to the government on every phone that connected to a particular cell tower during a certain time. They’ve also happily, repeatedly handed over your data to corporate interests: Last year the Federal Communications Commission fined AT&T, Verizon, and T-Mobile nearly $200 million for selling users’ personal information, including their locations, to data brokers. (AT&T’s fine was later overturned by an appeals court ruling intended to limit the FCC’s enforcement powers.) Many data brokers in turn sell the information to federal agencies, including ICE and other parts of the DHS, offering an all-too-easy end run around restrictions on those agencies’ domestic spying.

Phreeli doesn’t promise to be a surveillance panacea. Even if your cellular carrier isn’t tying your movements to your identity, the operating system of whatever phone you sign up with might be. Even your mobile apps can track you.

But for a startup seeking to be the country’s most privacy-focused mobile carrier, the bar is low. “The goal of this phone company I’m starting is to be more private than the three biggest phone carriers in the US. That’s the promise we’re going to massively overdeliver on,” says Merrill. “I don’t think there’s any way we can mess that up.”

Merrill’s not-entirely-voluntary decision to spend the last 20-plus years as a privacy diehard began with three pages of paper that arrived at his office on a February day in New York in 2004. An FBI agent knocked on the door of his small internet service provider firm called Calyx, headquartered in a warehouse space a block from the Holland Tunnel in Manhattan. When Merrill answered, he found an older man with parted white hair, dressed in a trench coat like a comic book G-man, who handed him an envelope.

Merrill opened it and read the letter while the agent waited. The first and second paragraphs told him he was hereby ordered to hand over virtually all information he possessed for one of his customers, identified by their email address, explaining that this demand was authorized by a law he’d later learn was part of the Patriot Act. The third paragraph informed him he couldn’t tell anyone he’d even received this letter—a gag order.

Then the agent departed without answering any of Merrill’s questions. He was left to decide what to do, entirely alone.

Merrill was struck immediately by the fact that the letter had no signature from a judge. He had in fact been handed a so-called National Security Letter, or NSL, a rarely seen and highly controversial tool of the Bush administration that allowed the FBI to demand information without a warrant, so long as it was related to “national security.”

Calyx’s actual business, since he’d first launched the company in the early ’90s with a bank of modems in the nonfunctional fireplace of a New York apartment, had evolved into hosting the websites of big corporate customers like Mitsubishi and Ikea. But Merrill used that revenue stream to give pro bono or subsidized web hosting to nonprofit clients he supported like the Marijuana Policy Project and Indymedia—and to offer fast internet connections to a few friends and acquaintances like the one named in this surveillance order.

Merrill has never publicly revealed the identity of the NSL’s target, and he declined to share it with WIRED. But he knew this particular customer, and he certainly didn’t strike Merrill as a national security threat. If he were, Merrill thought, why not just get a warrant? The customer would later tell Merrill he had in fact been pressured by the FBI to become an informant—and had refused. The bureau, he told Merrill, had then retaliated by putting him on the no-fly list and pressuring employers not to hire him. (The FBI didn’t respond to WIRED’s request for comment on the case.)

Merrill immediately decided to risk disobeying the gag order—on pain of what consequences, he had no idea—and called his lawyer, who told him to go to the New York affiliate of the American Civil Liberties Union, which happened to be one of Calyx’s web-hosting clients. After a few minutes in a cab, Merrill was talking to a young attorney named Jameel Jaffer in the ACLU’s Financial District office. “I wish I could say that we reassured him with our expertise on the NSL statute, but that’s not how it went down,” Jaffer says. “We had never seen one of these before.”

Merrill, meanwhile, knew that every lawyer he showed the letter to might represent another count in his impending prosecution. “I was terrified,” he says. “I kind of assumed someone could just come to my place that night, throw a hood over my head, and drag me away.”

Phreeli will use a novel encryption system called Double-Blind Armadillo, based on cutting-edge crypto protocols known as zero-knowledge proofs, to pull off tricks like accepting credit card payments from customers without keeping any record that ties that payment information to their particular phone.

Despite his fears, Merrill never complied with the FBI’s letter. Instead, he decided to fight its constitutionality in court, with the help of pro bono representation from the ACLU and later the Yale Media Freedom and Information Access Clinic. That fight would last 11 years and entirely commandeer his life.

Merrill and his lawyers argued that the NSL represented an unconstitutional search and a violation of his free-speech rights—and they won. But Congress only amended the NSL statute, leaving the provision about its gag order intact, and the legal battle dragged out for years longer. Even after the NSL was rescinded altogether, Merrill continued to fight for the right to talk about its existence. “This was a time when so many people in his position were essentially cowering under their desks. But he felt an obligation as a citizen to speak out about surveillance powers that he thought had gone too far,” says Jaffer, who represented Merrill for the first six years of that courtroom war. “He impressed me with his courage.”

Battling the FBI took over Merrill’s life to the degree that he eventually shut down his ISP for lack of time or will to run the business and instead took a series of IT jobs. “I felt too much weight on my shoulders,” he says. “I was just constantly on the phone with lawyers, and I was scared all the time.”

By 2010, Merrill had won the right to publicly name himself as the NSL’s recipient. By 2015 he’d beaten the gag order entirely and released the full letter with only the target’s name redacted. But Merrill and the ACLU never got the Supreme Court precedent they wanted from the case. Instead, the Patriot Act itself was amended to rein in NSLs’ unconstitutional powers.

In the meantime, those years of endless bureaucratic legal struggles had left Merrill disillusioned with judicial or even legislative action as a way to protect privacy. Instead, he decided to try a different approach. “The third way to fight surveillance is with technology,” he says. “That was my big realization.”

So, just after Merrill won the legal right to go public with his NSL battle in 2010, he founded the Calyx Institute, a nonprofit that shared a name with his old ISP but was instead focused on building free privacy tools and services. The privacy-focused version of Google’s Android OS it would develop, designed to strip out data-tracking tools and use Signal by default for calls and texts, would eventually have close to 100,000 users. It ran servers for anonymous, encrypted instant messaging over the chat protocol XMPP with around 300,000 users. The institute also offered a VPN service and ran servers that comprised part of the volunteer-based Tor anonymity network, tools that Merrill estimates were used by millions.

As he became a cause célèbre and then a standout activist in the digital privacy world over those years, Merrill says he started to become aware of the growing problem of untrustworthy cellular providers in an increasingly phone-dependent world. He’d sometimes come across anti-surveillance hard-liners determined to avoid giving any personal information to cellular carriers, who bought SIM cards with cash and signed up for prepaid plans with false names. Some even avoided cell service altogether, using phones they connected only to Wi-Fi. “Eventually those people never got invites to any parties,” Merrill says.

All these schemes, he knew, were legal enough. So why not a phone company that only collects minimal personal information (or none) from its normal, non-extremist customers? As early as 2019, he had already consulted with lawyers and incorporated Phreeli as a company. He decided on the for-profit startup route after learning that 501(c)(3) nonprofit status can’t apply to a telecom firm. Only last year did he finally raise $5 million, mostly from one angel investor. (Merrill declined to name the person. Naturally, they value their privacy.)

Building a system that could function like a normal phone company—and accept users’ payments like one—without storing virtually any identifying information on those customers presented a distinct challenge. To solve it, Merrill consulted with Zooko Wilcox, one of the creators of Zcash, perhaps the closest thing in the world to actual anonymous cryptocurrency. The Z in Zcash stands for “zero-knowledge proofs,” a relatively new form of crypto system that has allowed Zcash’s users to prove things (like who has paid whom) while keeping all information (like their identities, or even the amount of payments) fully encrypted.
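
Zcash itself relies on zk-SNARKs, which are far more elaborate. To show what “proving something while keeping the secret hidden” means in the simplest case, here is a minimal sketch of a Schnorr proof of knowledge made non-interactive with the Fiat-Shamir heuristic. It is not Zcash’s or Phreeli’s construction. The group parameters, function names, and the `context` label are illustrative assumptions, and a real system would use a vetted prime-order group.

```python
import hashlib
import secrets

# Toy parameters: arithmetic mod the Mersenne prime 2^127 - 1 with base 3.
# Real deployments use a vetted prime-order group (e.g. an elliptic curve).
MODULUS = 2**127 - 1
G = 3
ORDER = MODULUS - 1  # by Fermat's little theorem, exponents can be reduced mod p - 1


def challenge(y: int, t: int, context: bytes) -> int:
    """Fiat-Shamir: replace the verifier's random challenge with a transcript hash."""
    transcript = b"|".join([str(G).encode(), str(y).encode(), str(t).encode(), context])
    return int.from_bytes(hashlib.sha256(transcript).digest(), "big") % ORDER


def schnorr_prove(x: int, context: bytes) -> tuple[int, int, int]:
    """Prove knowledge of the secret x behind y = G^x mod MODULUS without revealing x."""
    y = pow(G, x, MODULUS)            # public value
    k = secrets.randbelow(ORDER)      # one-time random nonce; never reuse
    t = pow(G, k, MODULUS)            # commitment
    c = challenge(y, t, context)
    s = (k + c * x) % ORDER           # response, masked by the random nonce k
    return y, t, s


def schnorr_verify(y: int, t: int, s: int, context: bytes) -> bool:
    """Accept iff G^s == t * y^c, the equation an honest prover always satisfies."""
    c = challenge(y, t, context)
    return pow(G, s, MODULUS) == (t * pow(y, c, MODULUS)) % MODULUS


if __name__ == "__main__":
    secret = secrets.randbelow(ORDER)
    y, t, s = schnorr_prove(secret, b"demo")
    print(schnorr_verify(y, t, s, b"demo"))  # prints True
```

The verifier ends up convinced the prover knows `x`, yet sees only `y`, `t`, and `s`, where `s` is masked by the fresh random nonce `k`.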

For Phreeli, Wilcox suggested a related but slightly different system: so-called “zero-knowledge access passes.” Wilcox compares the system to people showing their driver’s license at the door of a club. “You’ve got to give your home address to the bouncer,” Wilcox says incredulously. The magical properties of zero-knowledge proofs, he says, would allow you to generate an unforgeable crypto credential that proves you’re over 21 and then show that to the doorman without revealing your name, address, or even your age. “A process that previously required identification gets replaced by something that only requires authorization,” Wilcox says. “See the difference?”
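
Wilcox’s over-21 example can be roughly approximated with a much simpler trick than a real zero-knowledge credential: a hash-chain threshold proof. This sketch is an illustrative assumption, not what Phreeli or Zcash uses. A hypothetical issuer hashes a secret seed once per year of age and vouches for the end of the chain (the issuer’s signature is omitted here). The holder reveals a point partway along the chain, and the bouncer hashes it 21 more times to see whether it lands on the vouched-for anchor.

```python
import hashlib
import secrets


def hash_chain(value: bytes, times: int) -> bytes:
    """Apply SHA-256 to value `times` times in a row."""
    for _ in range(times):
        value = hashlib.sha256(value).digest()
    return value


def issue(age: int) -> tuple[bytes, bytes]:
    """Issuer: give the holder a secret seed and vouch for anchor = H^age(seed).

    A real issuer would sign the anchor; that signature is omitted here.
    """
    seed = secrets.token_bytes(32)
    return seed, hash_chain(seed, age)


def prove_at_least(seed: bytes, age: int, threshold: int) -> bytes:
    """Holder: reveal H^(age - threshold)(seed), a point partway up the chain."""
    if age < threshold:
        raise ValueError("cannot prove a threshold above the real age")
    return hash_chain(seed, age - threshold)


def check_at_least(proof: bytes, anchor: bytes, threshold: int) -> bool:
    """Bouncer: hash the proof `threshold` more times and compare to the anchor."""
    return hash_chain(proof, threshold) == anchor


if __name__ == "__main__":
    seed, anchor = issue(34)
    proof = prove_at_least(seed, 34, 21)
    print(check_at_least(proof, anchor, 21))  # prints True
```

The bouncer learns only that the holder is at least 21, because SHA-256 can’t be run backward to count how far the revealed value sits from the seed. Unlike a true zero-knowledge pass, though, presenting the same anchor twice makes visits linkable, which is one reason real systems use heavier cryptography.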

The same trick will now let Phreeli users prove they’ve prepaid their phone bill without connecting their name, address, or any payment information to their phone records—even if they pay with a credit card. The result, Merrill says, will be a user experience for most customers that’s not very different from their existing phone carrier, but with a radically different level of data collection.

As for Wilcox, he’s long been one of that small group of privacy zealots who buys his SIM cards in cash with a fake name. But he hopes Phreeli will offer an easier path—not just for people like him, but for normies too.

“I don’t know of anybody who’s ever offered this credibly before,” says Wilcox. “Not the usual telecom-strip-mining-your-data phone, not a black-hoodie hacker phone, but a privacy-is-normal phone.”

Even so, enough tech companies have pitched privacy as a feature for their commercial product that jaded consumers may not buy into a for-profit telecom like Phreeli purporting to offer anonymity. But the EFF’s Cohn says that Merrill’s track record shows he’s not just using the fight against surveillance as a marketing gimmick to sell something. “Having watched Nick for a long time, it’s all a means to an end for him,” she says. “And the end is privacy for everyone.”

Merrill may not like the implications of describing Phreeli as a cellular carrier where every phone is a burner phone. But there’s little doubt that some of the company’s customers will use its privacy protections for crime—just as with every surveillance-resistant tool, from Signal to Tor to briefcases of cash.

Phreeli won’t, at least, offer a platform for spammers and robocallers, Merrill says. Even without knowing users’ identities, he says the company will block that kind of bad behavior by limiting how many calls and texts users are allowed, and banning users who appear to be gaming the system. “If people think this is going to be a safe haven for abusing the phone network, that’s not going to work,” Merrill says.
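
Merrill doesn’t describe how those limits are enforced. One common approach that needs no identity at all is a token bucket per line, keyed by an opaque identifier. The sketch below is hypothetical throughout: the `line_id` key, the burst capacity, and the refill rate are illustrative values, not Phreeli’s actual limits.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Caps the sustained rate of calls/texts while allowing short bursts."""

    def __init__(self, capacity: float, refill_per_sec: float, now: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = capacity  # start with a full burst allowance
        self.updated = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class LineRateLimiter:
    """Keeps one bucket per opaque line ID; no name or payment data is involved."""

    def __init__(self, capacity: float, refill_per_sec: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, line_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        bucket = self.buckets.get(line_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_sec, now)
            self.buckets[line_id] = bucket
        return bucket.allow(now)
```

With a capacity of 3 and a refill rate of 0.5 per second, a line can send three texts at once, is then refused, and regains one send every two seconds. Lines that keep hitting the cap could then be flagged for the kind of ban Merrill mentions.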

But some customers of his phone company will, to Merrill’s regret, do bad things, he says—just as they sometimes used to with pay phones, that anonymous, cash-based phone service that once existed on every block of American cities. “You put a quarter in, you didn’t need to identify yourself, and you could call whoever you wanted,” he reminisces. “And 99.9 percent of the time, people weren’t doing bad stuff.” The small minority who were, he argues, didn’t justify the involuntary societal slide into the cellular panopticon we all live in today, where a phone call not tied to freely traded data on the caller’s identity is a rare phenomenon.

“The pendulum has swung so far in favor of total information awareness,” says Merrill, using an intelligence term of the Bush administration whose surveillance order set him on this path 21 years ago. “Things that we used to be able to take for granted have slipped through our fingers.”

“Other phone companies are selling an apartment that comes with no curtains—where the windows are incompatible with curtains,” Merrill says. “We’re trying to say, no, curtains are normal. Privacy is normal.”

I just searched for them and all that came up were the news articles, all released within 24h…

Honeypot?

Nick Merrill is freaking legit. He was behind Calyx before, one of the few people who’s challenged, and won, an NSL. I can’t see him flipping, ever.

Had no idea this was him. Explains the decision to back away from calyxos and do this then.

Great stuff.

It says this was created by the founder of the Calyx foundation, who is a reputable guy. So unless they’re just lying, I think it’s legit. Still might give it a few days or weeks to see what shakes out. Probably just paid marketing.

Yeah was sort of wondering about that too…

No. It’s fucking Merrill.

https://en.wikipedia.org/wiki/American_Civil_Liberties_Union_v._Ashcroft

The Big Story is exclusive to subscribers

Just $4/month bro, $4 isn’t much bro, it’s just another small subscription bro.

Sorry, keep forgetting I have Bypass Paywalls on.

How

I was faced with a dono splash page as I clicked into this article, lol. Not a paywall, but still a bit ironic!

Holy wall of text Batman! I’m lowkey interested in the service, but uhhhh…

Well when you wanna know more you can go back and read it

It’s the text extracted from the linked article

Get out.

Thanks op.

Attention span. Get one.

Removed by mod

I actually do intend to be impolite. Stop copying and pasting bullshit AI responses. That ziponlymobile.com isn’t even a real URL. Typical ChatGPT slop.

I’m sorry, I truly do not intend to be impolite and I didn’t downvote you, but I think people can ask AI for a summary if they want to themselves.

Sorry again. I just really don’t like AI, and my expectation of a social media website is for it to be about human interactions. We can talk with AI anytime we want, what we’re lacking is pure human communication.

Agreed, but such a brick of posted text doesn’t make for an easy conversation either; in this case a summary can be helpful for knowing what it’s about.

But that ‘brick’ of the posted text is just the article that is linked. So if we are commenting under a post dedicated to the article it would stand to reason that we read the article itself, would you not agree?

a summary can be helpful

No. LLMs can’t reliably summarize without inserting made-up things, which your now-deleted comment (which can still be read in the modlog here) is a great example of. I’m not going to waste my time reading the whole thing to see how much is right or wrong but it literally fabricated a nonexistent URL 😂

Please don’t ever post an LLM summary again.

deleted by creator

What the fuck. Why

Lmao it just…made up a website out of thin air.

Everyone, please report this comment to the mods

oh it’s nick from Calyx 😊

Is that good or bad?

That’s very good. https://en.wikipedia.org/wiki/Nicholas_Merrill

It’s a good thing! he genuinely cares about user privacy. The Wikipedia entry had some info worth reading

That’s very good.

And what a cute favicon!

TL;DR: Nicholas Merrill, a well-known privacy activist, launched Phreeli, a phone service that lets you use mobile data and calls without giving your identity. It runs on T-Mobile’s network but only keeps a ZIP code and uses zero-knowledge crypto so even payments are not linked to you. Merrill spent 10 years fighting the FBI over surveillance and now wants to make privacy simple and normal for everyone.

Removed by mod

A few things. If you sign up, don’t then go use the number with things that associate it to your real identity like a bank account or credit card. Also, if you’ve already used your phone with a provider that has your real name, then it’s compromised because you could be linked by the IMEI. Get a fresh phone that you’ve never linked to your identity before. Also, don’t transfer your number to this service. Get a new number provided by them. Additionally, pay with cryptocurrency.

This is all if you want to stay truly anonymous with no traces back to you.

You don’t even need a zipcode if you use https://silent.link/ then you can pay with whatever crypto and have an esim where the balance never expires and it works in most of the world. I’ve used it a few months and it’s pretty good if you don’t need a phone number.

How does an esim work with no number? Data only?

Yes, super common. Travel eSIMs without a number.

This, and because there’s no number it’s easier for them to not have KYC.

Interesting, because the article says the ZIP code is required for tax purposes.

Maybe the owner is outside of the US, maybe it’s OK?

Phreeli users can also pay their bills with tough-to-trace cryptocurrency like Zcash or Monero.

Lies. I did this so you don’t have to.

That’s just on the $25 plan because it’s auto-pay only. The other plans accept crypto.

How convenient…

I think that’s the point. You can trade some privacy for convenience if you insist.

Sounds like you’re trading money for privacy. Why isn’t it available on the $25 tier?

because it’s auto-pay only

deleted by creator

Yes I did read that the first time, but it doesn’t explain anything.

Good point. Many companies offer discounts for crypto. It’s disappointing to see a crypto surcharge here :(

An autopay discount (really a fee for not using autopay) is the standard in US cellular.

Okay I looked over their stuff, a couple thoughts:

I want them to be more clear in their privacy policy about what exactly they can and would reveal for a court order, what their screening process is for those orders, under what conditions they would fight one and if they will reveal anything outside the context of a full court order.

Reason: this is one of your biggest areas of vulnerability when signing up for a phone plan.

The Lexipol leaks showed that many police departments use phone information requests so much that they include a set of request forms (typically one for each carrier) in the appendix of their operations manuals. Frequently the forms are the only data request tool in that appendix.

If you happened to have a call with someone who then did something Cool™ and got picked up, expect the detective to have your name and address on a post-it on their desk by the next morning. If you talked to them on some online chat platform they’ll send a court order to that platform for your IP then do the same to your carrier to unmask your identity.

Yes, if you were also sufficiently Cool™ they’ll start doing more invasive things like directly tracking your phone via tower dumps, but that’s a significant escalation in time and effort. If things got Cool™ enough that this is a concern though, it may buy you time to get a new phone if you live in an area dense enough for that to not be immediately identifying.

Also: I suspect the zip code is completely unverifiable so put whatever you want in there, basically pick your favorite sales tax rate.

Cool™Cool™Cool™

deleted by creator

5G has exceptional location tracking accuracy and precision without the use of any GPS

That’s the question, what are they actually providing to warrants. You don’t need to provide a name to be able to identify someone. Do they provide logs or data that could be uniquely identifying before the police pull a tower dump? Who knows…

Very impressive.

When will this service be forced to change or shut down? I think five years. Possibly less if a major case hits the news where a bad actor used the service.

seems like a boon to swatters and the shitbags of the world… sure, privacy minded people, ICE trackers etc., yeah, but also… the shitbags…

Verbose

Wait, they ask for your details when setting up a phone in America?

I thought y’all lived in the land of the free!

The most I’ve ever been asked for to setup a phone is my bank details, and that’s it, so they can setup direct debit for my contract

Every EU phone number has to be connected to an identifiable person or company.

By name, not by anything else

Yes, by name. And that name has to be verified. They know everything else by default.

They still think I live at my parents house even though I moved out 25 years ago, it’s wildly inaccurate and easy to circumvent

ironically that is the last thing i would want to give to a phone company

The ability to pay money for your contract?

Edit: they only ask for that if on Contract, if pay-as-you-go they ask for no details at all

I like the ability to pay, I just don’t want to allow them access or even knowledge of my bank account.

A direct debit is a contractual agreement, they have zero access to the bank account, just the unique identification number and an automated system that requests money from that unique identifier once per month.

And that if there’s no money in the account, they don’t take you into credit, but instead just pause service until you pay

Depends on where you live, of course. I always find it very disconcerting linking bank accounts, even in countries where it should be OK. The fuck-ups are way too many for me. I don’t want any of that.

These services usually have the ability to debit whatever your bill is, and then suddenly their system fucks up, or you get hacked and someone commits fraud, and before you know it a $5000 payment comes out of your account instead of the expected $30.00.

It’s better to have that set up on a credit card in case something happens and you get a much better chance to dispute it.

That’s literally impossible, it’s not how it works

At the very least it’s literally impossible in UK and EU.

The system isn’t actually taking any money from you at all, it’s merely sending requests to the bank to ask for the money.

Some banks automatically will go “okay!”, some need human confirmation for every transaction, ALL need human confirmation for any transactions over £200 (by law)

That’s definitely a UK/EU thing then. If you get a $5000 cellphone bill in NA because someone did long distance fraud and you have pre authorized debits set up, $5000 is coming out of your account in Canada and USA.

Edit: assuming you have $5k and/or overdraft on the account. Not sure what happens if you have less than $5k and no overdraft. Like, I don’t know if it’d take you to $0, or fail and charge you an insufficient funds fee.

Why?

Because they misunderstand how banking works

I had to give a fingerprint and a picture of me holding my passport, plus copy of the passport to get a Sim in Peru…plus a half hour of my life in the process

Jesus, that’s fucked

*cries in Canada*

Is there a reason they used an image of a phone with a screen smeared with what looks like rendered goose fat?

i think it’s fingerprints…?

Like a pun on data fingerprinting. But that’s not exactly what this service protects against.

I’ve bought and activated several prepaid phones over the years, paid cash, obviously pseudonymous name, no ID. Last was several years ago, idk if you can still do that. When I did it, it was at phone stores and they told me it was ok.

That said, phones will never be private. There’s too much tracking and logging. People can’t accept that, because they love their phones too much. But you have to make a choice. Anonymous carriers are of almost no help because all the stuff about deanonymizing database records applies even more to phones. At best they help stay away from some marketing crap and stuff on that level. Government surveillance will see right through it.

Its still possible some places, but a lot of stores have cracked down on it

It’s not possible in any corporate stores purely for the fact that they use facial recognition extensively. Doesn’t matter if you can technically get away with paying cash and using a fake name. You’re being tracked the moment their cameras can see you and they have extensive profiles on people even if you’ve never used a debit card, given them an email, or given them a phone number.

Also, the ones I’ve seen in stores lately are only the trial offers that are only good for a couple days and have to be “replenished” with an online account to stay functional for more than a couple days. Mint wouldn’t even activate initially with an email alias. I called support and they said “we can’t activate it with that email, we need your real email.” I then told them no worries, I’d just return it to Best Buy. Then they “found a way” to activate it, but I would have needed to give a credit card if I wanted it to stay active more than the 3 days. Best Buy didn’t carry any longer-duration prepaid card in the stores.

Pay some guy to go in and buy them.

Or have them mailed to someone you know

Or use prepaid esim, paid with prepaid debit card

Prepaid sims exist, no?

You can still do it.

Just buy from a heavily trafficked grocery store. Arrive by foot. Wear a good covid mask. Pay with cash. Wait a few weeks after purchase before use.

Before you turn it on, cover all cameras with tape and disable the microphone if you can (or plug it with a cutoff headphone jack).

Cut a piece of paper and wedge it between the battery leads. Only pull it out and turn it on in a public place far from your home. And you have to burn the phone after every service you activate.