Large Language Models don’t reason either. The idea that Artificial General Intelligence will come from LLMs is a fallacy pushed to drive up stock prices. When some company eventually claims to have achieved AGI with LLMs, it will just be another arbitrary milestone that moves the goalposts further.

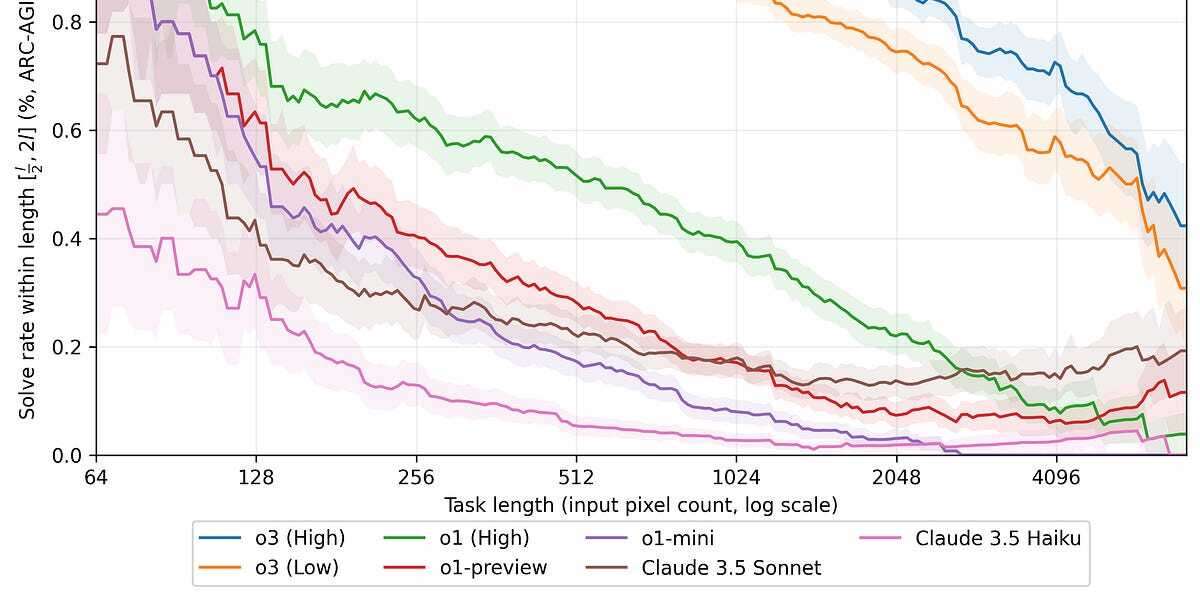

Interesting conclusion: LLMs are inherently 1D in nature, while ARC is a 2D task. LLMs can emulate 2D reasoning for sufficiently small tasks but suffer greatly as the task size increases. It’s like asking humans to solve 4D problems.

This is probably a fundamental limitation of the LLM architecture and will need to be solved someday, presumably by a completely different approach.
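To make the 1D vs 2D point concrete, here is a minimal sketch, assuming a grid is serialized row by row with one token per cell and no separator tokens (real prompt formats often add newlines or multi-character cells, which shifts the numbers slightly). The helper names `flatten` and `sequence_distance` are hypothetical, made up for illustration. Horizontal neighbours stay 1 position apart in the sequence, while vertical neighbours end up a full row width apart, so the gap grows with the grid size.

```python
def flatten(grid):
    # Row-major serialization: row 0 left to right, then row 1, and so on.
    return [cell for row in grid for cell in row]


def sequence_distance(width, a, b):
    # Distance between cells a=(row, col) and b=(row, col) once the grid
    # is flattened row by row, assuming one token per cell and no separators.
    (r1, c1), (r2, c2) = a, b
    return abs((r1 * width + c1) - (r2 * width + c2))


if __name__ == "__main__":
    small = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(flatten(small))  # [1, 2, 3, 4, 5, 6, 7, 8, 9]
    for width in (3, 10, 30):
        # Horizontal neighbours stay 1 apart; vertical neighbours drift apart.
        print(width,
              sequence_distance(width, (0, 0), (0, 1)),
              sequence_distance(width, (0, 0), (1, 0)))
```

In a 3-wide grid the cell directly below is 3 tokens away; in a 30-wide grid it is 30 tokens away, which is one way to picture why the emulated 2D reasoning gets harder as tasks grow.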

I’ve beaten Portal and Portal 2. Have I not solved 4D problems?

Not really. Portals give you shortcuts through 3D space; they don’t let you interact with a whole different dimension. If you have Minecraft, there’s a really nice custom map called “The Hypercube” which sort of emulates a 4th dimension. It felt much more confusing to me than Portal (2).