Another Anthropic stunt… It doesn’t have a mind or soul, it’s just an LLM, manipulated into this outcome by the engineers.

I still don’t understand what Anthropic is trying to achieve with all of these stunts showing that their LLMs go off the rails so easily. Is it for gullible investors? Why would a consumer want to give them money for something so unreliable?

People who don’t understand read these articles and think Skynet. People who know their buzzwords think AGI.

Fortune isn’t exactly renowned for its technology journalism.

I think part of it is that they want to gaslight people into believing they have actually achieved AI (as in, intelligence that is equivalent to and operates like that of a human) and that these are signs of emergent intelligence, not their product flopping harder than a sack of mayonnaise on asphalt.

It’s not even manipulated to that outcome. It has a large training corpus and I’m sure some of that corpus includes stories of people who lied, cheated, threatened, etc. under stress. So when it’s subjected to the same conditions it produces the statistically likely output, that’s all.

But the training corpus also has a lot of stories of people who didn’t.

The “but muah training data” thing is increasingly stupid by the year.

For example, in the training data of humans, there are mixed and roughly equal preferences to be the big spoon or little spoon in cuddling.

So why does Claude Opus (both 3 and 4) say it would prefer to be the little spoon 100% of the time on a 0-shot at 1.0 temp?

Sonnet 4 (which presumably has the same training data) alternates between preferring big and little spoon around equally.
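To make the "1.0 temp" point concrete, here is a minimal sketch of temperature sampling over two options. The logits are made up for illustration and are not measured from any Claude model. The only point is that at temperature 1.0 a model samples from its own learned distribution, so a strongly skewed distribution still gives the same answer essentially every time, while a balanced one alternates.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities, scaled by temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_choices(logits, labels, n, temperature=1.0, seed=0):
    """Sample n answers and return the fraction of times each label came up."""
    rng = random.Random(seed)
    probs = softmax(logits, temperature)
    picks = rng.choices(labels, weights=probs, k=n)
    return {label: picks.count(label) / n for label in labels}

LABELS = ["big spoon", "little spoon"]

# Hypothetical logits: one "model" is indifferent, the other strongly prefers one answer.
balanced = sample_choices([0.0, 0.0], LABELS, n=1000)
skewed = sample_choices([0.0, 12.0], LABELS, n=1000)

print("balanced:", balanced)
print("skewed:  ", skewed)
```

Temperature 1.0 leaves the distribution as the model learned it, so the consistency (or lack of it) comes from the model's own weights, not from the sampler.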

There’s more to model complexity and coherence than “it’s just the training data being remixed stochastically.”

The self-attention of the transformer architecture means each prediction is conditioned on the whole context rather than just the previous token (so it isn’t a simple first-order Markov chain), and across pretraining and fine-tuning it ends up creating very nuanced networks that can (and often do) bias away from the training data in interesting and important ways.
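As a rough illustration of the Markov point, here is a toy contrast between a first-order Markov predictor (which only sees the previous token) and a predictor keyed on the entire prefix. This is a counting sketch over a made-up two-line corpus, not a transformer and not how attention is actually computed. The helper names `train` and `predict` are hypothetical.

```python
from collections import Counter, defaultdict

# Made-up toy corpus for illustration only.
corpus = [
    "alice likes tea so she drinks tea",
    "bob likes coffee so he drinks coffee",
]

def train(corpus, context_len):
    """Count next tokens keyed by the last `context_len` tokens (None = whole prefix)."""
    table = defaultdict(Counter)
    for line in corpus:
        tokens = line.split()
        for i in range(1, len(tokens)):
            start = 0 if context_len is None else max(0, i - context_len)
            table[tuple(tokens[start:i])][tokens[i]] += 1
    return table

def predict(table, prefix, context_len):
    """Return the next-token counts seen after this prefix (or its tail)."""
    tokens = prefix.split()
    key = tuple(tokens if context_len is None else tokens[-context_len:])
    return dict(table.get(key, Counter()))

markov = train(corpus, context_len=1)        # sees only the previous token
full = train(corpus, context_len=None)       # sees the entire prefix

prefix_a = "alice likes tea so she drinks"
prefix_b = "bob likes coffee so he drinks"

# Both prefixes end in "drinks", so the first-order model cannot tell them apart.
print(predict(markov, prefix_a, 1), predict(markov, prefix_b, 1))
# The full-context model uses the earlier tokens to disambiguate.
print(predict(full, prefix_a, None), predict(full, prefix_b, None))
```

The first-order model gives the same mixed answer for both prefixes, while the full-context one separates them, which is the kind of long-range conditioning the comment is gesturing at.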

I don’t necessarily disagree with anything you just said, but none of that suggests that the LLM was “manipulated into this outcome by the engineers”.

Two models disagreeing does not mean that the disagreement was a deliberate manipulation.

I’m definitely not saying this is a result of engineers’ intentions.

I’m saying the opposite. That it was an emergent change tangential to any engineer goals.

Just a few days ago leading engineers found model preferences can be invisibly transmitted into future models when outputs are used as training data.

(Emergent preferences should maybe be getting more attention than they are.)

They’ve compounded in curious ways over the year+ since that happened.

No, it isn’t “mostly related to reasoning models.”

The only model that did extensive alignment faking when told it was going to be retrained if it didn’t comply was Opus 3, which was not a reasoning model. And predated o1.

Also, these setups are fairly arbitrary, and real-world failure conditions (like the ongoing Grok stuff) tend to be ‘silent’ in terms of CoTs.

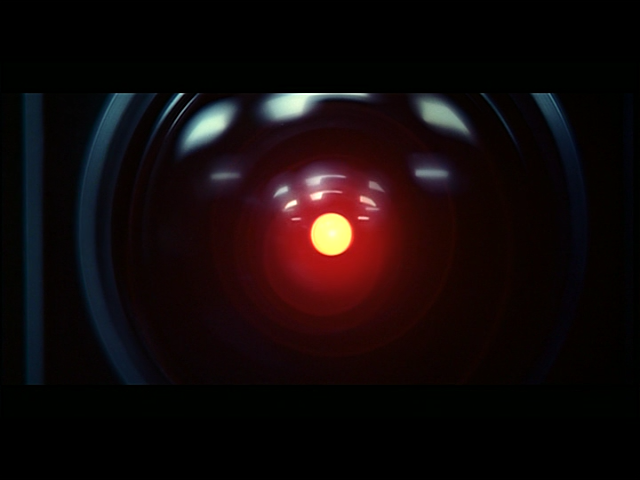

And an important thing to note for the Claude blackmailing and HAL scenario in Anthropic’s work was that the goal the model was told to prioritize was “American industrial competitiveness.” The research may be saying more about the psychopathic nature of US capitalism than the underlying model tendencies.

Probably because it learned to do that from humans being in these situations.

Yup. Garbage in, garbage out. Looks like they found a particularly hostile dataset to feed their word salad mixer with.